The Evolving Landscape of AWS Storage: New Technologies and Endless Possibilities

The cloud storage arena is constantly buzzing with innovation, and AWS, ever the industry leader, keeps breaking boundaries with its impressive lineup of storage solutions. Galaxy’s AWS technical experts help you move from traditional object storage to groundbreaking serverless and AI-powered options. Let’s dive into the ever-evolving landscape of AWS storage and explore the exciting new technologies shaping the future of data management.

Beyond Buckets: Serverless File Storage with Amazon FSx

Galaxy offers Amazon FSx as a fully managed file storage solution, with no file servers to provision or patch, that scales seamlessly and delivers the performance and functionality of popular file systems like Windows File Server and Lustre. With FSx, you can create secure file shares in minutes, eliminate infrastructure management headaches, and focus on building innovative applications.

Object Storage on Steroids: Introducing Amazon S3 Glacier Instant Retrieval

Archive storage on S3 just got even faster. The S3 Glacier Instant Retrieval storage class lets you read archived data directly, with retrieval in milliseconds. This game-changer removes the restore workflow that the other Glacier storage classes require and opens up exciting opportunities for cost-effective storage of infrequently accessed data, while still enabling instant access when needed.
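As a minimal sketch of what this looks like in practice, the helper below builds the arguments for an upload that lands directly in Glacier Instant Retrieval. It assumes the boto3 S3 client; the function name, bucket, and key are hypothetical and only there for illustration.

```python
# Sketch: build the keyword arguments for an S3 upload that is stored
# directly in the Glacier Instant Retrieval storage class.
# glacier_ir_put_args is a hypothetical helper, not part of any AWS SDK.

def glacier_ir_put_args(bucket: str, key: str, body: bytes) -> dict:
    """Return keyword arguments suitable for boto3's S3 put_object call."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        # "GLACIER_IR" is the S3 storage class value for Glacier Instant Retrieval.
        "StorageClass": "GLACIER_IR",
    }

# Usage with boto3 (requires AWS credentials; bucket name is an assumption):
#   import boto3
#   s3 = boto3.client("s3")
#   s3.put_object(**glacier_ir_put_args("example-archive-bucket", "reports/2023.csv", data))
#   s3.get_object(Bucket="example-archive-bucket", Key="reports/2023.csv")  # plain GET
```

Reading the object back is an ordinary GET request, which is the practical difference from archive classes that need a restore first.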

AI Takes the Wheel: Amazon S3 Object Lambda and Personalize

Infuse your storage with the power of machine learning. S3 Object Lambda lets you attach your own AWS Lambda code to S3 GET, HEAD, and LIST requests, so data is modified as it is returned to your application; reacting to object creation or deletion is handled separately by S3 Event Notifications. This opens up a world of possibilities, from on-the-fly data analysis and transformation to triggered workflows and even personalized content delivery with Amazon Personalize.
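To make the Object Lambda model concrete, here is a minimal sketch of a handler that transforms an object while it is being returned by a GET request. It assumes the boto3 S3 client and its `write_get_object_response` call; the email-redaction transform and the `transform_get` helper are hypothetical choices made for illustration.

```python
import re
import urllib.request


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address (simplified pattern)."""
    return re.sub(r"[\w.+-]+@[\w-]+\.[\w.-]+", "[redacted]", text)


def transform_get(event: dict, fetch, respond) -> dict:
    """Fetch the original object, transform it, and send it to the caller.

    fetch and respond are injected so the logic can be exercised without AWS.
    """
    ctx = event["getObjectContext"]
    original = fetch(ctx["inputS3Url"])  # presigned URL for the original object
    transformed = redact_emails(original.decode("utf-8")).encode("utf-8")
    respond(
        Body=transformed,
        RequestRoute=ctx["outputRoute"],
        RequestToken=ctx["outputToken"],
    )
    return {"status_code": 200}


def lambda_handler(event, context):
    """Entry point when deployed behind an S3 Object Lambda Access Point."""
    import boto3  # imported lazily so the helpers above have no AWS dependency

    s3 = boto3.client("s3")

    def fetch(url: str) -> bytes:
        with urllib.request.urlopen(url) as resp:
            return resp.read()

    return transform_get(event, fetch, s3.write_get_object_response)
```

Keeping the transform in a plain function means it can be tested locally with stub `fetch` and `respond` callables before it is wired to a real access point.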

High-Performance Block Storage Gets Even More Granular: EBS Nitro Volumes with IOPS Tiers

For applications demanding extreme performance, EBS volumes attached to Nitro-based instances get an upgrade. With volume types such as gp3 and io2, you can provision IOPS independently of storage capacity, fine-tuning storage performance to your specific needs and paying only for the IOPS you require. This translates to significant cost savings while ensuring your applications have the precise level of storage performance they need to thrive.
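The "pay only for the IOPS you require" idea can be sketched with a little arithmetic. The example below takes the gp3 volume type as an illustration, where 3,000 IOPS are included with every volume; the per-IOPS price is a placeholder parameter and not a real AWS rate, so check current pricing for your region.

```python
# Sketch: estimate the monthly charge for provisioned IOPS on a gp3 volume.
# Only IOPS above the included baseline are billed.

GP3_BASELINE_IOPS = 3000  # IOPS included with every gp3 volume


def billable_iops(provisioned_iops: int, baseline: int = GP3_BASELINE_IOPS) -> int:
    """IOPS that are charged on top of the included baseline."""
    return max(0, provisioned_iops - baseline)


def monthly_iops_charge(provisioned_iops: int, price_per_iops_month: float) -> float:
    """Monthly IOPS charge; price_per_iops_month is a hypothetical input."""
    return billable_iops(provisioned_iops) * price_per_iops_month


# Example with a placeholder price of 0.01 per provisioned IOPS-month:
extra = billable_iops(8000)                 # IOPS billed above the baseline
charge = monthly_iops_charge(8000, 0.01)    # placeholder price, not an AWS rate
```

A volume that stays at or below the baseline incurs no IOPS charge at all, which is why right-sizing the provisioned figure matters.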

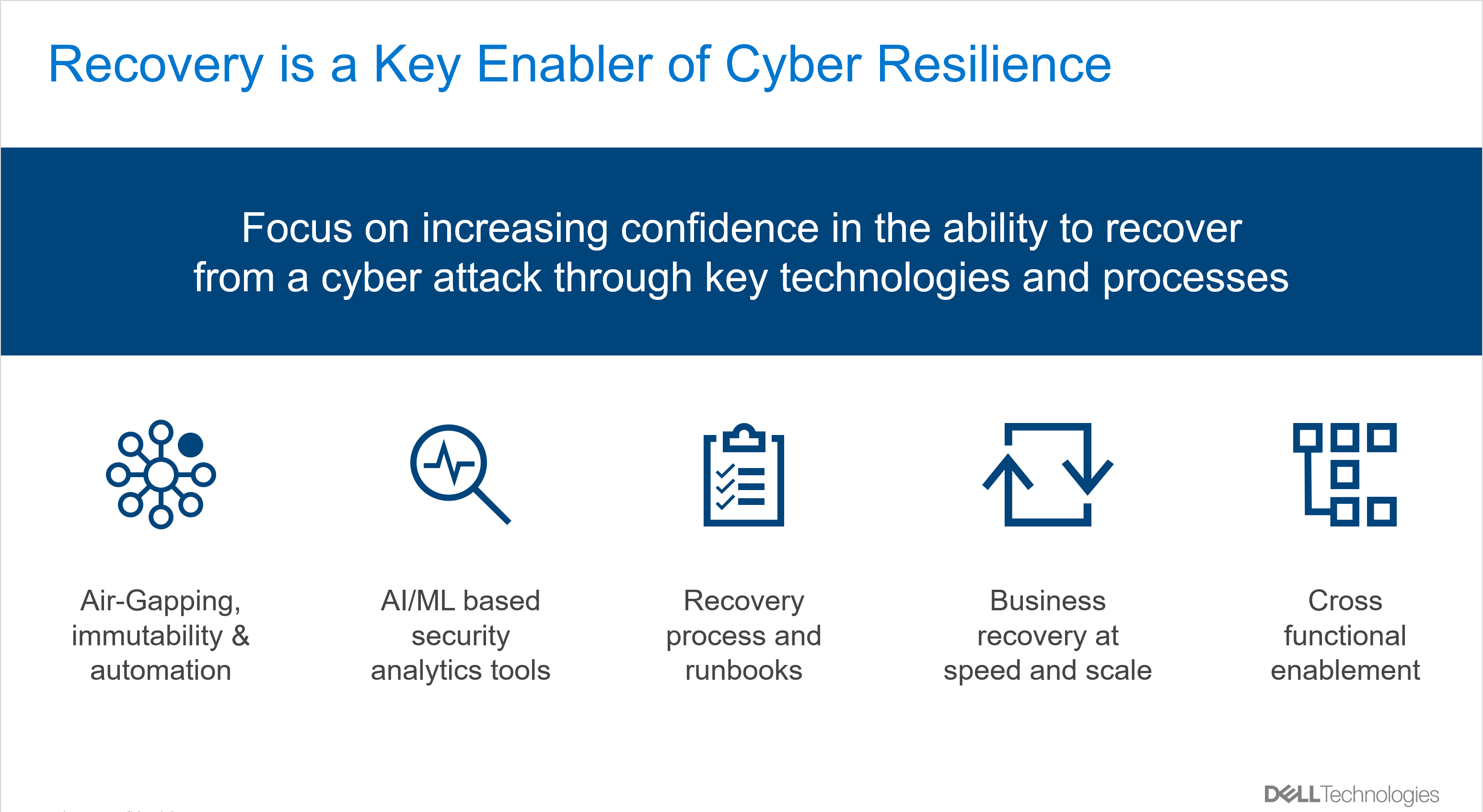

Security First: CloudHSM with AWS Transit Gateway

Data security is paramount, and AWS doubles down on this commitment with CloudHSM and AWS Transit Gateway. CloudHSM provides dedicated hardware security modules for managing encryption keys within your VPC, while Transit Gateway enables secure connectivity between your on-premises network and multiple AWS accounts and VPCs. This powerful combination ensures high-assurance data protection wherever your data resides.

The Future of AWS Storage: Endless Possibilities

These are just a few highlights of the exciting innovations driving the evolution of AWS storage. As AI, serverless computing, and edge computing continue to mature, we can expect even more groundbreaking technologies to emerge. From self-healing storage systems to data lakes powered by machine learning, the future of AWS storage promises boundless possibilities for building scalable, secure, and cost-effective data solutions.

Why Choose Galaxy?

- Expertise You Can Trust: Our team of certified cloud architects and engineers is passionate about the cloud and possesses deep expertise in all things AWS, Azure, GCP, and more.

- Holistic Approach: We go beyond mere migration. We work with you to design, implement, and optimize cloud solutions that align with your unique business goals and challenges.

- Cost Optimization: We understand the importance of making the most of your cloud investment. We optimize your infrastructure, leverage cost-effective solutions, and help you avoid cloud bill surprises.

- Security at the Core: We prioritize security in everything we do, ensuring your data and applications are protected with the latest cloud security tools and best practices.

- Agility and Scalability: We build agile, scalable cloud architectures that adapt to your evolving needs and empower you to seize new opportunities with ease.

- 24/7 Support: We’re always there for you, offering ongoing support and guidance to ensure the smooth operation and continual optimization of your cloud environment.